In the vibrant suburb of Pitipana, just a stone's throw from the bustling city of Colombo, lies the nerve center of Sri Lanka's digital landscape: the SLT Data Center. A recent field visit to this facility was like stepping into the heart of the nation's technological infrastructure. The new Data Centre in Pitipana is a 500-rack facility housed in a three-storey building on a 2-acre site. It also provides capable infrastructure for the Disaster Recovery (DR) requirements of enterprise customers.

Why is it located in Pitipana?

Choosing the right location for a data center involves several factors. The geographic location must balance proximity to users against natural disaster risk, and the absence of flood risk was the main reason Pitipana was chosen. Accessibility via robust transportation infrastructure is crucial for equipment delivery and maintenance. Here, four-lane roads serve the site, and the Data Centre is 8.4 km from the nearest expressway exit. Arriving directly from Bandaranaike International Airport, investors or business travelers can reach the Data Center via the expressway in as little as 45 minutes. Power availability and reliability, including redundancy measures, play a pivotal role in uninterrupted operations. The Data Center consumes 2.5 MW of power in total. Climate matters too, because it drives cooling requirements, and energy efficiency is kept in view to minimize environmental impact.

Tier III data center

SLTMobitel, the pioneer of data center services in the country, offers state-of-the-art facilities that align with global standards. Its certifications include Uptime Data Center Tier, Green Gold, ISO 27001 for information security, and ISO 9001 for Quality Management Systems. Customer IT workloads are co-located in a Tier III data center, reflecting SLTMobitel's commitment to providing top-tier services.

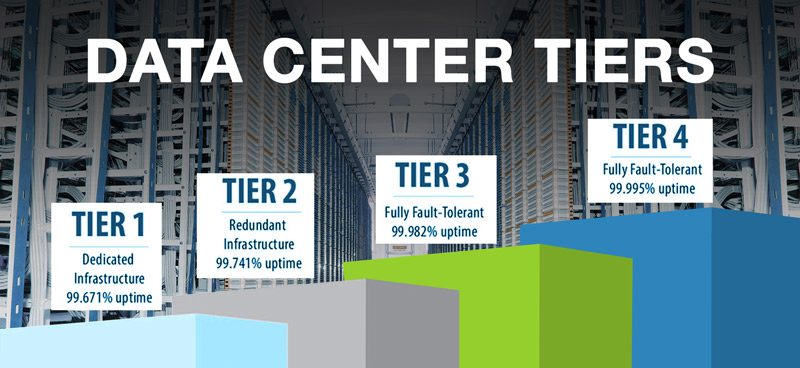

Data centers are classified into tiers based on the Uptime Institute's Tier Classification System, which is widely recognized in the industry. The system provides a standardized method for evaluating and classifying data center infrastructure based on its reliability, redundancy, and availability. The tiers range from Tier I to Tier IV, with each tier representing a level of resilience and fault tolerance.

Tier I: Basic Capacity

Availability: 99.671%

Tier I data centers have a basic level of infrastructure with minimal redundancy. They are susceptible to disruptions from both planned maintenance and unexpected events.

Tier II: Redundant Capacity Components

Availability: 99.741%

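Tier II data centers have more redundancy than Tier I, providing some protection against failures. They include redundant capacity components for key systems such as power and cooling, so some components can be serviced without downtime, although work on the single distribution path still requires a shutdown.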

Tier III: Concurrently Maintainable

Availability: 99.982%

Tier III data centers have multiple, independent distribution paths for power and cooling, ensuring that equipment maintenance can be performed without disrupting operations. They offer a higher level of resilience than Tier II facilities.

Tier IV: Fault Tolerance

Availability: 99.995%

Tier IV data centers provide the highest level of reliability and fault tolerance. They have fully redundant components and systems, and they can withstand the failure of any single component without affecting operations. Tier IV facilities are designed to handle the most critical and sensitive operations.
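To make the availability percentages more tangible, the following minimal sketch converts each tier's figure into the downtime it implies per year. It assumes a non-leap year of 8,760 hours, and the names `TIER_AVAILABILITY` and `annual_downtime_hours` are only illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years (assumption)

# Availability figures as listed for each tier in this article
TIER_AVAILABILITY = {
    "Tier I": 99.671,
    "Tier II": 99.741,
    "Tier III": 99.982,
    "Tier IV": 99.995,
}


def annual_downtime_hours(availability_percent: float) -> float:
    """Return the yearly downtime implied by an availability percentage."""
    return (1 - availability_percent / 100) * HOURS_PER_YEAR


for tier, availability in TIER_AVAILABILITY.items():
    hours = annual_downtime_hours(availability)
    print(f"{tier}: {availability}% -> {hours:.1f} h/year ({hours * 60:.0f} min)")
```

By this arithmetic, Tier I allows roughly 28.8 hours of downtime a year, Tier II about 22.7 hours, Tier III about 1.6 hours, and Tier IV about 26 minutes.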

These tiers are not just about reliability. They also have implications for the design, construction, and operational procedures of a data center. The higher the tier, the more resilient the data center is to disruptions and the more costly it is to build and maintain. SLTMobitel positions itself as a leading provider dedicated to meeting high industry standards, and it co-locates customer IT workloads within a Tier III data center. This designation signifies a facility designed for high reliability, featuring redundant systems and multiple distribution paths to maintain operational continuity.

Carrier Neutral Data Center

A Carrier Neutral Data Center (CNDC) gives businesses the freedom to select from a variety of telecommunications carriers, because the facility has no exclusive affiliation with any one of them. SLT has recently provided this service to Dialog and Lankacom.

This model fosters a competitive environment within the facility, encouraging multiple carriers to co-locate their network equipment. The result is a diverse ecosystem where clients can choose the telecommunications providers that best align with their connectivity requirements in terms of bandwidth, reliability, and cost-effectiveness.

Within a Carrier Neutral Data Center, clients benefit from the flexibility to establish direct connections with various carriers, internet service providers (ISPs), content delivery networks (CDNs), and other network service providers. The competitive landscape within the facility often translates into cost savings for clients.

3+1 redundant UPS system by Schneider Electric

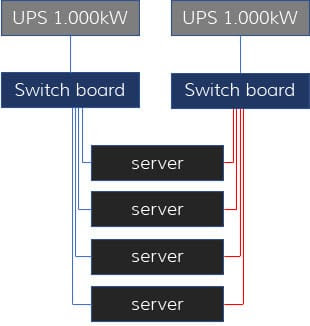

The 3+1 redundant UPS system by Schneider Electric is built for reliability and high availability in power management. In this configuration, three UPS modules operate in parallel to support the critical load, while a fourth module provides redundancy. This design keeps power flowing through a module failure or during maintenance, minimizing the risk of power interruptions and improving overall system reliability.

N equals the amount of capacity required to power or cool the data center facility at full IT load. A design of N means the facility was sized only for full load, with zero redundancy added. If the facility is at full load and a component fails or needs maintenance, mission-critical applications would suffer. N is the same as non-redundant.

If N equals the amount needed to run the data center facility, N+1 provides a minimal level of redundancy by adding one component to cover a single failure or the maintenance of one component. Imagine you're hosting a virtual meeting, and you anticipate having 50 participants. Applying the N+1 principle here would mean preparing for one additional participant, just in case more people join the meeting. So, "N" in this scenario represents the expected number of participants (50), and the additional participant is the "+1". Therefore, you set up the virtual meeting for N+1, or 51 participants, ensuring that you're prepared for an unexpected increase in attendance.

Let's consider a scenario where a manufacturing facility has a total energy demand of 800 megawatts (MW), and each power generator can handle 400 MW. Meeting the demand alone takes two generators (N = 800 MW). Following the N+1 redundancy principle, the facility would install a third 400 MW generator (N+1 = 1,200 MW). This redundancy ensures that even if one generator is undergoing maintenance, the facility can still meet its energy demand using the remaining two operational generators, maintaining an uninterrupted power supply of 800 MW.
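The N and N+1 arithmetic can be sketched in a few lines of Python. This is only an illustration under simple assumptions (identical units, load shared freely between them), and the function names `units_for_redundancy` and `survives_loss` are hypothetical. For an 800 MW demand served by 400 MW units, N is two units and N+1 is three.

```python
import math


def units_for_redundancy(load, unit_capacity, spares=1):
    """Return (n, installed): units needed to carry the load, and units
    installed once the given number of spare units is added."""
    n = math.ceil(load / unit_capacity)
    return n, n + spares


def survives_loss(load, unit_capacity, installed, lost=1):
    """True if the remaining units can still carry the full load."""
    return (installed - lost) * unit_capacity >= load


# Generator example: 800 MW demand, 400 MW per generator
n, installed = units_for_redundancy(load=800, unit_capacity=400)
print(n, installed)                          # N = 2, N+1 = 3
print(survives_loss(800, 400, installed))    # three installed, one offline
print(survives_loss(800, 400, n))            # only two installed, one offline

# A 3+1 UPS layout, measured in module-sized units of load
print(units_for_redundancy(load=3, unit_capacity=1))
```

With three generators installed, losing one still leaves 800 MW available. With only two, a single outage halves the supply. The same calculation describes a 3+1 UPS: three modules carry the load and the fourth covers a failure or maintenance window.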

Schneider Electric's modular UPS system is adaptable, with independent modules for easy scalability and simplified maintenance. Automatic bypass features enhance reliability, helping to keep power flowing to critical loads. Advanced monitoring and remote management offer real-time insights and control for proactive maintenance. With an Eco Mode for energy efficiency, the 3+1 redundant UPS system provides reliable backup power, safeguarding critical operations from unforeseen disruptions.

Empowered by a water-cooled chiller system

A chiller removes heat from a load and transfers it to the environment using a refrigeration system. This heat transfer device is the preferred cooling machine in power plants and other large-scale facilities. In simple terms, it consists of a reservoir of water, or of an ethylene glycol and water mixture, plus circulation components. The cooling fluid is circulated from the reservoir to the equipment being cooled. There are also air-cooled chillers, which disperse heat using fans.

Water-cooled chiller systems use a cooling tower, which makes them more efficient than air-cooled chillers. A water-cooled chiller is more efficient because its condensing temperature depends on the ambient wet-bulb temperature, which is lower than the ambient dry-bulb temperature. The lower the temperature at which a chiller condenses, the more efficient it is. This system has several essential components, including:

- Cooling towers

- Condenser water pumps

- Make-up water pumps

- Chillers

- TES reservoirs

What are the benefits of a water-cooled chiller? These chillers are more efficient and last longer than the air-cooled alternative. Those who would like the equipment to be placed indoors may also find the water-cooled machine desirable. In a modern data center, the water-cooled chiller supports reliability, efficiency, and sustainability. Its role in managing heat, optimizing energy consumption, and providing a scalable and reliable cooling solution makes it indispensable in the design and operation of contemporary data centers.

SLT Data Center HVAC (Heating, ventilation, and air conditioning) Systems

As I walked through the rows of racks in the data center, I noticed two types of aisles: one receiving cool air and the other carrying away hot air expelled by the IT equipment. Traditionally, the strategy was to let the air flow without any containment, assuming that cool air pushed up through the raised floor would reach the racks before mixing with the hot air. However, as the heat generated by each rack increased, this uncontained approach became inefficient. Before diving into more effective solutions, let's first explore the concept of raised floors.

In larger data centers using air-cooled systems, raised floors are commonly used. Cold air is sent to the underfloor space and then supplied to IT equipment through perforated floor tiles placed in specific locations. This cold air flows up through the perforated tiles, enters the servers, picks up heat, and rises above the servers. The HVAC units draw the warmed air back into the cooling system. The airflow pattern stays consistent because the racks in each row all face the same direction.

Proper air management in data centers requires keeping cold and hot air from mixing. It's essential that cold supply air enters IT equipment without mixing with hot exhaust, and that heat is efficiently returned to the cooling system without mixing with cold air. This involves delivering cold supply air in one passage and removing warm return air in another. Server racks are strategically arranged so that cool air flows through them, absorbing heat before being discharged into the hot aisle. Warm air from the hot aisle is drawn back to the cooling unit, transferring heat from IT equipment to the cooling coil.
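How much cold air a rack needs can be estimated from the sensible heat balance P = ρ · c_p · V · ΔT, where P is the rack's heat load, V the volumetric airflow, and ΔT the temperature rise between cold and hot aisle. The sketch below is a rough estimate, not a measurement from the facility: the air properties are typical textbook values, the 12 K temperature rise is an assumption, and the 5 kW rack load is a hypothetical figure (the 2.5 MW total spread evenly over 500 racks would give 5 kW per rack, though that total also covers cooling and other overheads).

```python
AIR_DENSITY = 1.2          # kg/m^3, assumed typical value near room temperature
AIR_SPECIFIC_HEAT = 1005   # J/(kg*K), assumed typical value for dry air
M3S_TO_CFM = 2118.88       # 1 m^3/s expressed in cubic feet per minute


def required_airflow_m3s(heat_load_w, delta_t_k):
    """Airflow (m^3/s) needed to carry away heat_load_w watts when the air
    warms by delta_t_k kelvin on its way through the rack."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_load_w / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_k)


# Hypothetical rack: 5 kW of IT load, 12 K rise from cold aisle to hot aisle
flow = required_airflow_m3s(heat_load_w=5000, delta_t_k=12)
print(f"{flow:.2f} m^3/s (~{flow * M3S_TO_CFM:.0f} CFM)")
```

Under these assumptions the rack needs roughly 0.35 m³/s of cold air. If hot exhaust mixes into the supply air, the usable temperature rise shrinks and the required airflow grows, which is why keeping the two air streams separate matters.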

Cisco Application Centric Infrastructure (ACI)

Cisco ACI, short for Application Centric Infrastructure, is a game-changer for managing the SLT data center. It simplifies things and beefs up security by taking a centralized and application-focused approach. This means businesses can use automation and set policies to run IT operations smoothly and speed up their digital transformation. Let's look at what it offers, and then at the return on investment (ROI) organizations can expect when they roll out Cisco ACI.

One key strength of Cisco ACI is that it gives you a total view of your data center network. That includes everything from the physical and virtual infrastructure to applications and services. This big-picture view helps organizations simplify how they run their networks, cut down on complexity, and boost their ability to adapt quickly. Cisco ACI also brings in a new way of handling networks through policies. This lets organizations set and enforce rules across the whole network, ensuring that applications perform consistently and stay secure, no matter where they're running.

One more cool thing about Cisco ACI is that it plays nice with multi-cloud setups. So, if an organization uses different cloud services, Cisco ACI lets them apply the same rules and security controls everywhere. This is a big deal because it keeps things consistent across various cloud platforms. Now, let's talk about the return on investment (ROI). When you put Cisco ACI into action, the benefits go beyond just working more efficiently. It helps cut operational costs, speeds up how fast applications get up and running, and boosts overall productivity. Since Cisco ACI offers a comprehensive and unified way to manage networks, it helps organizations make the most of their IT investments.

The SLT Data Center plays a pivotal role in shaping the digital landscape of Sri Lanka. As the gateway to the nation's data-driven future, the facility serves as the backbone for telecommunications, connecting millions of users and businesses. My journey into the heart of this technological hub shed light on the complexities of data management and the innovations driving Sri Lanka's digital evolution.

The visit to the SLT Data Center in Pitipana made clear the critical role such facilities play in steering a nation towards a digitally progressive future. The combination of cutting-edge technology, environmental awareness, and rigorous security measures reflects the center's commitment to quality. As I stepped out of the Data Center, I carried with me a new understanding of the unseen forces that keep our digital world in motion. The SLT Data Center stands not just as a technological powerhouse but as a symbol of Sri Lanka's readiness to embrace and shape the digital future.
